*AI images used for illustrative purposes only.

Designed for Digital, Used on Paper

At McGraw Hill Education, the platform included a scoresheet feature that automatically graded online quizzes, tests, and homework.

While this worked well for older students, it posed a challenge for K5 teachers. Many of them used the platform to create tests and quizzes, then printed them out for children to complete by hand. For these paper-based assignments, the auto-grading feature was of no use.

After collecting the completed assignments, teachers had to manually input scores into the scoresheet to access platform-generated reports. Without doing this, they couldn't view performance data or identify areas for remediation.

The process was lengthy: teachers had to enter a score for each question, for every student, which made it time-consuming and cumbersome.

Making Manual Grading Less Painful

So how could we make entering test scores less of a headache?

One early idea was to let teachers upload a spreadsheet that the system would use to auto-populate the online scoresheets. It seemed promising, since most teachers already recorded scores in spreadsheets.

Stakeholders liked this approach, but spreadsheet upload was out of scope, especially for the initial version. These were the project requirements:

- Give teachers a faster way to bulk-enter scores for multiple students

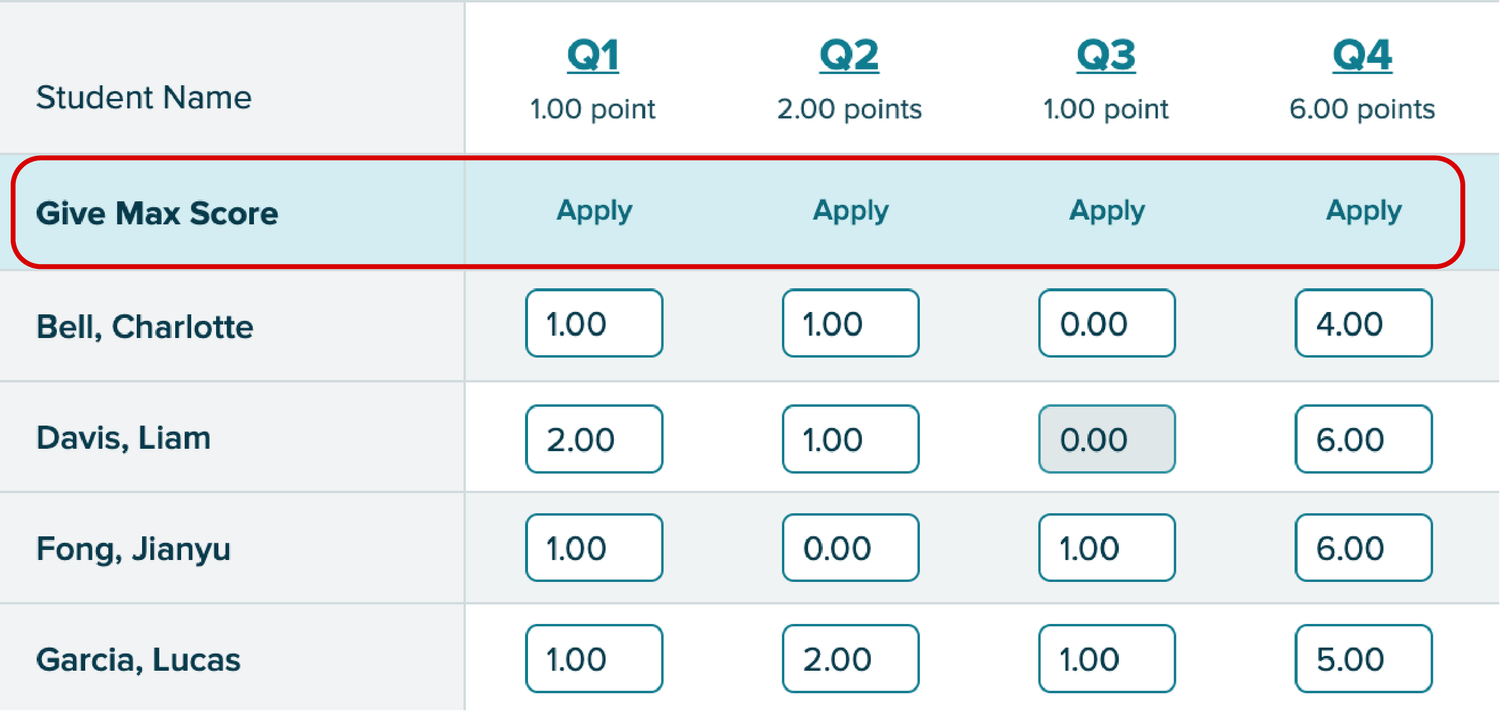

- Provide an "Apply All" option so teachers can assign the same score to every student on a question when most (or all) students earn that score

- Allow teachers to give more than 100% of a score (e.g., for extra credit)

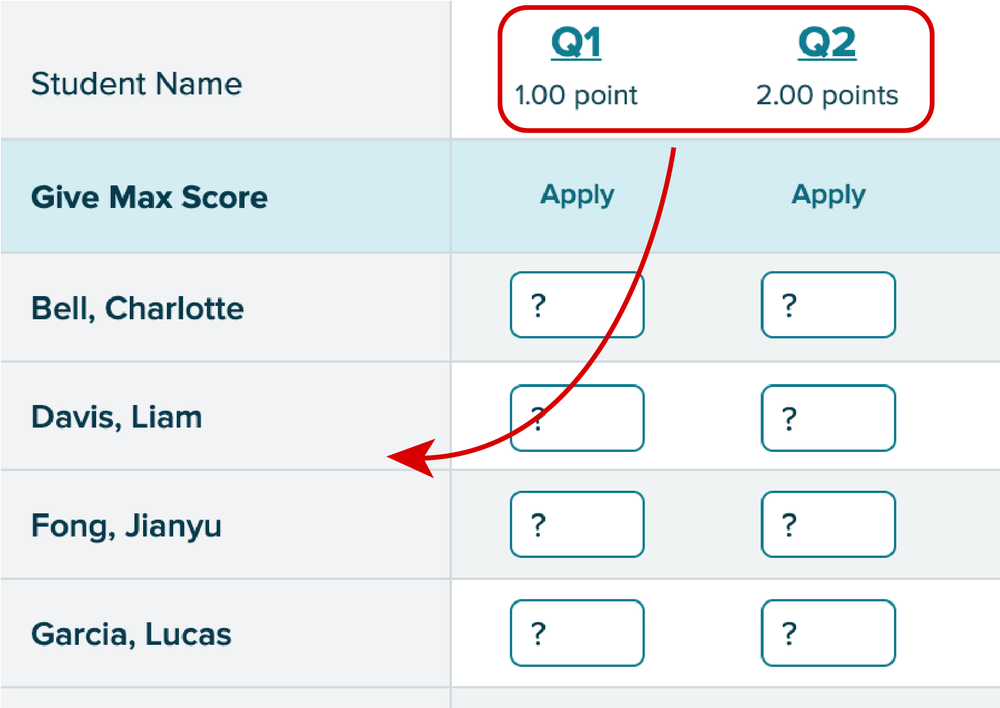

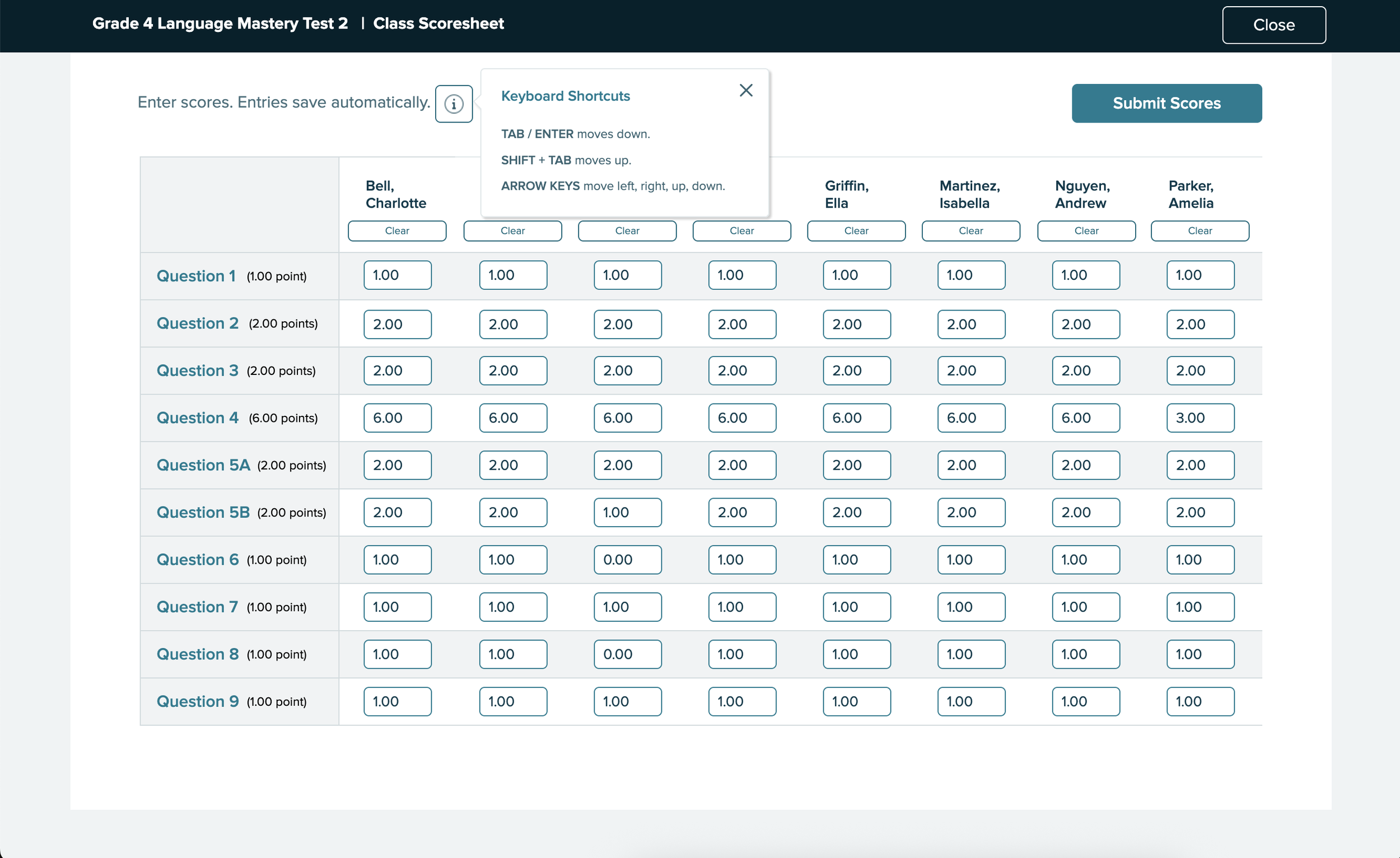

I began with low-fidelity wireframes, focusing on simplifying score entry across a full class. My initial design approach drew inspiration from a spreadsheet-style layout, similar to Excel, to make bulk scoring intuitive and familiar.

The spreadsheet view offered a quick overview of the entire class, making it easy to scan and compare scores. However, with a large number of students or questions, it could become overwhelming and hard to navigate.

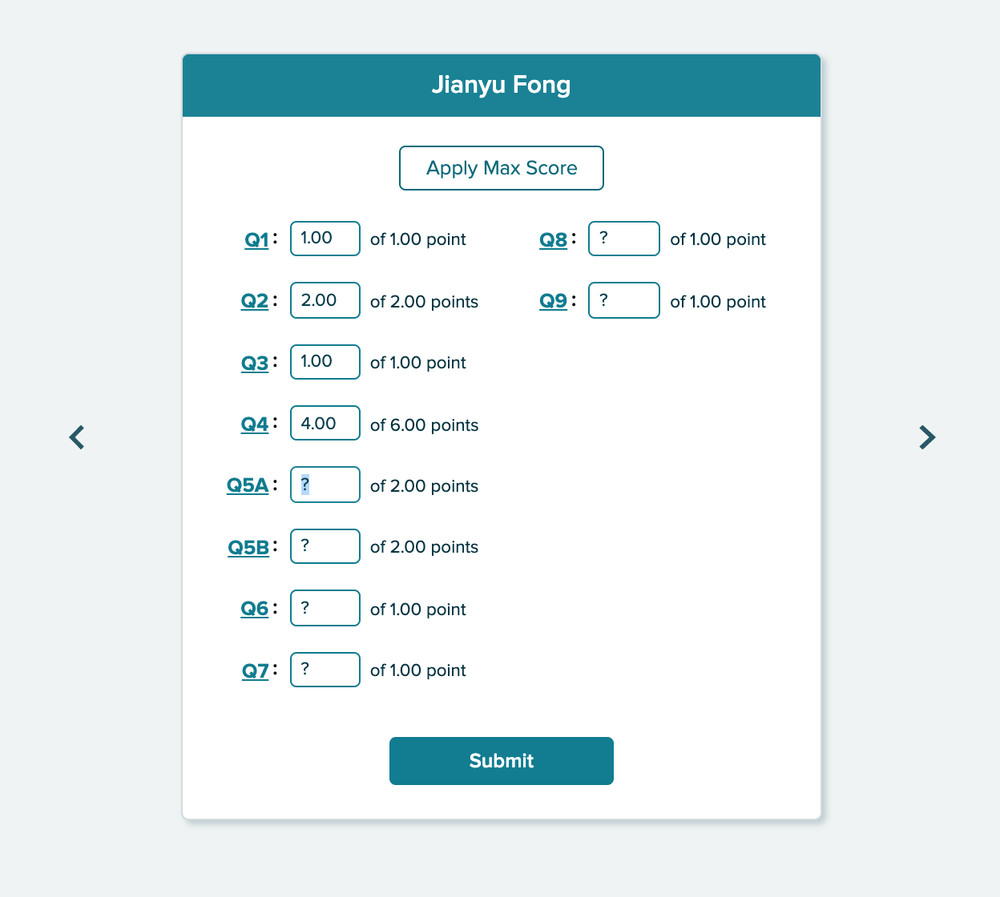

To address this, I created an alternative design focused on the individual student. This view allowed teachers to enter scores one student at a time.

Both designs had merit, and stakeholders agreed there wasn't a clear winner upfront. We decided to test both the class-level spreadsheet view and the individual student view to better understand which approach teachers preferred in real-world scenarios.

Putting the Designs to the Test

Catching a Core Issue Early

Before starting formal user testing, our research team conducted a pilot session with a K5 teacher. While observing, I noticed a major design issue with the spreadsheet view: the participant struggled to enter scores horizontally, question by question, across the columns.

"It would be nice if it [the design] was going down vertically here as well, instead of horizontally, it would just looking up and down on here. It's almost harder for me to go up and down and then track horizontally."

Teachers grading paper assignments typically work student-by-student rather than question-by-question. The original spreadsheet design conflicted with this mental workflow, so the participant preferred the second, individual-student-focused design. Based on this insight, I revised the designs to place the questions in a fixed left-side column, with student names displayed across the top.

Our Testing Approach

Our research team recruited seven K5 instructors who had experience with two of our major K5 products, Reading Mastery and Reading Wonders. All were familiar with the existing online scoresheet.

We presented both the spreadsheet design (Design A) and the student-by-student design (Design B).

To simulate how teachers grade printed assignments, we provided participants with PDFs of three scored tests. We asked them to have the PDFs open on a second screen (or printed out) so they could reference them while entering scores for the first three students.

What Worked and What Didn't

Spreadsheet Design (Design A)

- Participants appreciated keyboard shortcuts (Tab and Enter) for efficient score entry, though some did not know these existed, indicating a need for improved discoverability or instructional copy.

- Design A performed well overall, but the "Submit Assignment" button received mixed feedback. While batch submission was valued, participants unanimously requested the ability to exclude absent or excused students.

- The "Apply" button (which auto-fills full credit for all questions) consistently confused users. Once it was explained, participants appreciated the functionality, but the feature wasn't intuitive on its own.

"I do like the format of this and makes it easy to put in and I imagine it would be even faster if I wasn't necessarily looking at a scanned document like toggling back and forth. I think that would be easier."

"So what I liked about it is that I clicked on it. Put a number, enter. Next one number enter number enter number entry, like it was it was quicker. It was a lot easier."

"The Apply button...I would think would just be to lock the score before you submit it. But I honestly visually. I just as you can see, I totally skipped over that."

"Yeah, I like that. I just didn't even see that there where it says give max for but now that you pointed that out to me it makes perfect sense."

Student-by-Student Design (Design B)

Participants found Design B easy to use for inputting scores, and the "Apply" button was much clearer in this layout.

However, there were some trade-offs:

- Many participants preferred seeing all students at once, which Design B didn't allow.

- Several noted that since graded assignments are rarely in alphabetical order, they would need to scroll back and forth frequently to match names, which could slow them down.

"I honestly almost even like this better from a type A personality perspective, because if I've got Lucas in front of me. Then I'm just looking at his score sheet. And I don't feel like I have all the other names and everything around me. But I mean, I do like both."

"It's hard to say. I like the previous one better, that we just submitted...and I think that I liked on that you just start at the top and you just go down to input all the scores."

Refining the Details

Out of 7 participants, 5 preferred Design A and 2 preferred Design B. When asked how the designs could be improved, participants suggested:

- The ability to mark students as absent or exempt

- Displaying overall scores or averages

- Using color coding to highlight high and low scores

- Showing the standards or skills linked to each question to help guide remediation

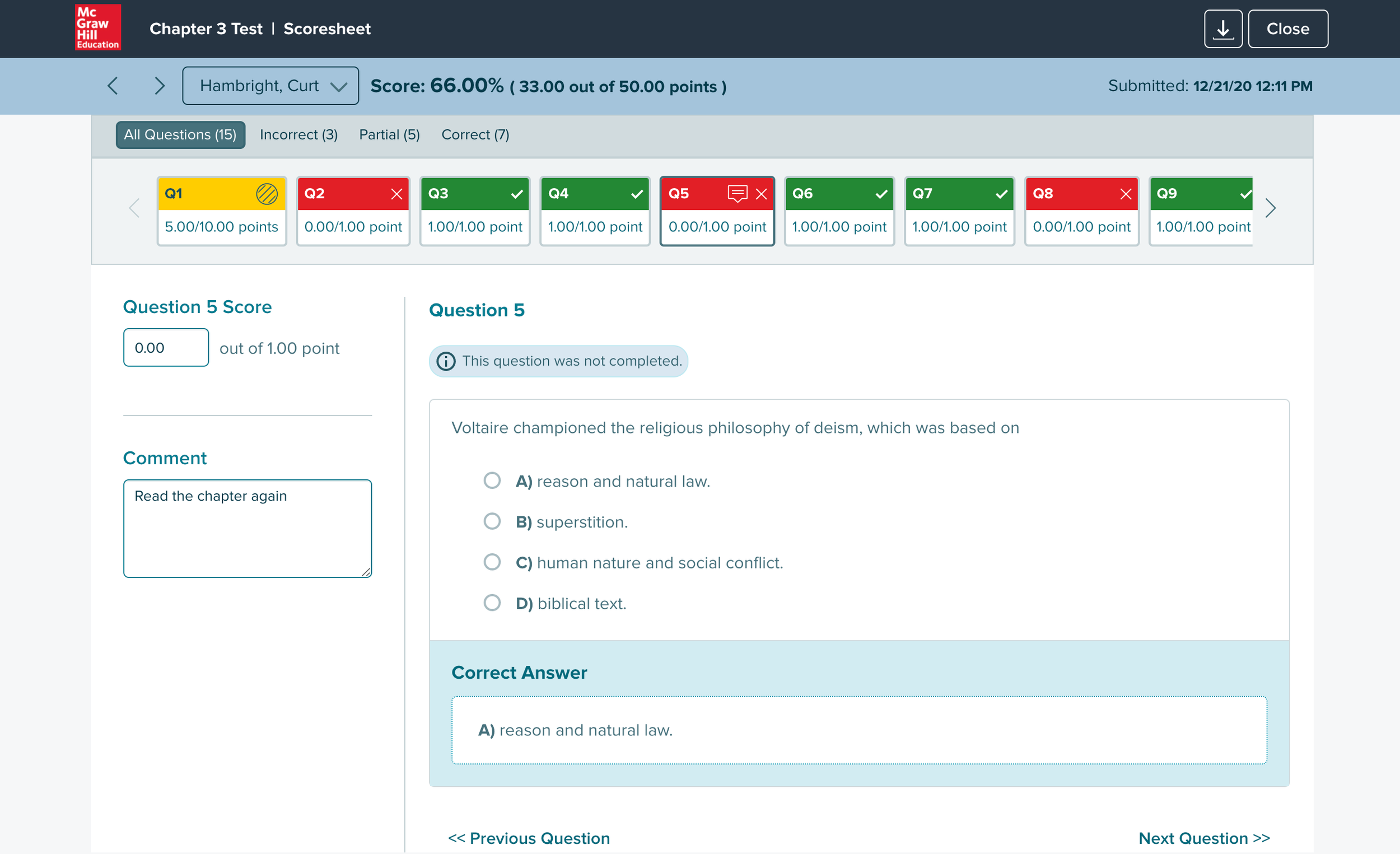

Based on the feedback, I decided to move forward with Design A and made the following changes:

- Renamed and styled the "Apply" button for clarity

- Added an info icon to explain keyboard shortcuts

- Allowed teachers to exclude students from submission

- Changed "Submit Assignment" to "Submit Scores"

- Added indicators showing which scores were submitted

The final version after multiple rounds of user testing:

Designing for Real Pain

One of the most surprising takeaways from this project was how excited users were about the prototype. I hadn't fully realized how frustrating the current process was for them, and it was rewarding to see how the new design helped ease that pain.

Here are a few things users said during testing:

"Um, I just want to say thank you for changing this because last year was, it like I...would I would do this on a weekend because it would take so long."

"A definite improvement over what we've had, I think what we've had not only isn't that easy to use, but I think it looks really outdated, which turns people off."

The Class Scoresheet was never officially launched due to shifting priorities and limited resources. Despite the challenges, especially last-minute scope changes and time constraints, I found the problem-solving process for the Class Scoresheet rewarding. It was a unique use case, and while the work was cut short, I'm confident the design addressed real user pain points and would have improved the overall grading experience.